4. Running Tensor-cell2cell to perform a tensor factorization

After generating a 4D-Communication Tensor, we can run Tensor-cell2cell to perform a tensor factorization and obtain the context-driven patterns of cell-cell communication (factors).

This tutorial will show you how to load a previously exported 4D-Communication Tensor and run Tensor-cell2cell on this tensor.

Initial Setup

Enable GPU use as discussed in Tutorial 03

[1]:

gpu_use = TRUE

library(reticulate, quietly = TRUE)

if (gpu_use){

device <- 'cuda:0'

tensorly <- reticulate::import('tensorly')

tensorly$set_backend('pytorch')

}else{

device <- NULL

}

Import Libraries

[2]:

library(liana, quietly = TRUE)

library(dplyr, quietly = TRUE)

library(tidyr, quietly = TRUE)

library(magrittr, quietly = TRUE)

library(tibble, quietly = TRUE)

library(ggplot2, quietly = TRUE)

library(Seurat, quietly = TRUE)

Attaching package: ‘dplyr’

The following objects are masked from ‘package:stats’:

filter, lag

The following objects are masked from ‘package:base’:

intersect, setdiff, setequal, union

Attaching package: ‘magrittr’

The following object is masked from ‘package:tidyr’:

extract

Attaching SeuratObject

Seurat v4 was just loaded with SeuratObject v5; disabling v5 assays and

validation routines, and ensuring assays work in strict v3/v4

compatibility mode

We will use reticulate to run Tensor-cell2cell in R

[3]:

use_condaenv('ccc_protocols')

c2c <- reticulate::import(module = "cell2cell")

Directories

[4]:

output_folder <- file.path('..', '..', 'data', 'tc2c-outputs')

Load Tensor

Now, we can load our 4D-Communication Tensor.

[5]:

tensor <- reticulate::py_load_object(filename = file.path(output_folder, 'BALF-Tensor-R.pkl'))

Perform Tensor Factorization

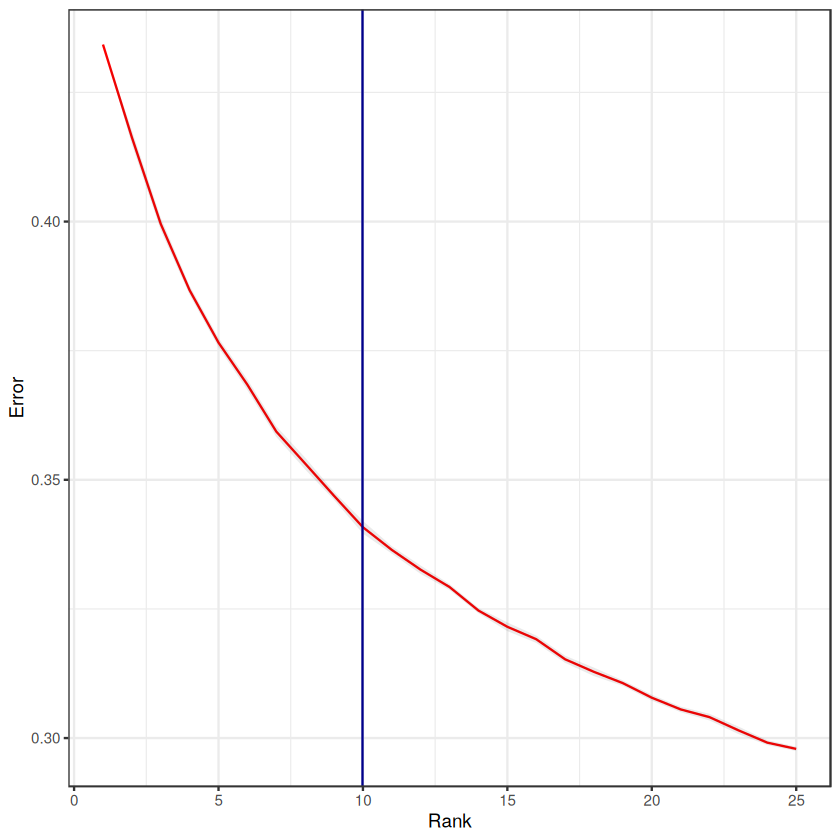

The above function to executes two important steps to perform the tensor factorization: elbow analysis to select the rank and decomposition to estimate the low-rank tensor. For additional details on these two steps, please see the Supplementary Section of the companion Python tutorial.

Key parameters are:

rankis the number of factors or latent patterns we want to obtain from the analysis. You can either indicate a specific number or leave it asNULLto obtain a suggested number through an elbow analysis.tf_optimizationindicates whether running the analysis in the'regular'or the'robust'way. The regular way means that the tensor decomposition is run 10 times per rank evaluated in the elbow analysis, and 1 time in the final decomposition. Additionally, the optimization algorithm has less number of iterations in the regular than the robust case (100 vs 500) and less precision (tolerance of 1e-7 vs 1e-8). The robust case runs the tensor decomposition 20 times per rank evaluated in the elbow analysis, and 100 times in the final decomposition. Here we could use thetf_optimization='regular', which is faster but generates less robust results. We recommend usingtf_optimization='robust, which takes longer to run (more iteractions and more precise too). It is important to notice that multiple runs of a tensor decomposition differ in the initialization values (regardless of usingtf_init='random'ortf_init='svd').seedis the seed for randomization. It controls the randomization used when initializing the optimization algorithm that performs the tensor decomposition. It is useful for reproducing the same result every time that the analysis is run. IfNULL, a different randomization will be used each time.'elbow_metric'is the metric to perform the elbow analysis to obtain a suggested number of factors. Options are'error'and'similarity', indicating respectively the error of tensor reconstruction, and the similarity of tensor factorization across multiple runs for a given rank. The similarity metric may result in non-smooth curves given the highly variability especially at higher ranks. If so, we recommend using the parametersmooth_elbow=True.uppper_rankis the max number of ranks to try in the elbow analysis.initis the initialization of the tensor decomposition algorithm. If your tensor contains a mask (tensor.mask), it will automatically useinit='random'. Options are'random'and'svd'. The latter helps to obtain results that are more close to a global optima from the optimization method behind the algorithm.svdis the method to perform the SVD to initialize the tensor factorization. This is only considered wheinit='svd'.

[6]:

tensor <- liana::decompose_tensor(tensor = tensor,

rank = NULL, # Number of factors to perform the factorization. If NULL, it is automatically determined by an elbow analysis

tf_optimization = 'robust', # To define how robust we want the analysis to be.

seed = 0, # Random seed for reproducibility

elbow_metric = 'error', # Metric to use in the elbow analysis

smooth_elbow = FALSE, # Whether smoothing the metric of the elbow analysis

upper_rank=25, # Max number of factors to try in the elbow analysis

init = 'random', # Initialization method of the tensor factorization

svd = 'numpy_svd', # Type of SVD to use if the initialization is 'svd'

factors_only = FALSE,

)

Estimating ranks...

Decomposing the tensor...

[7]:

print(paste0('The estimated tensor rank is: ', tensor$rank))

[1] "The estimated tensor rank is: 10"

Let’s see how the automated elbow analysis arrived at its rank estimation:

[8]:

# Estimate standard error

error_average <- tensor$elbow_metric_raw %>%

t() %>%

as.data.frame() %>%

mutate(rank=row_number()) %>%

pivot_longer(-rank, names_to = "run_no", values_to = "error") %>%

group_by(rank) %>%

summarize(average = mean(error),

N = n(),

SE.low = average - (sd(error)/sqrt(N)),

SE.high = average + (sd(error)/sqrt(N))

)

# plot

error_average %>%

ggplot(aes(x=rank, y=average), group=1) +

geom_line(col='red') +

geom_ribbon(aes(ymin = SE.low, ymax = SE.high), alpha = 0.1) +

geom_vline(xintercept = tensor$rank, colour='darkblue') + # rank of interest

theme_bw() +

labs(y="Error", x="Rank")

Next, we can visualize what the factors look like.

LIANA has a helper function to visualize the factors. However, it requires a SingleCellExperiment object as input to access the associated metadata. So, let’s load our Seurat object, convert it to a SingleCellExperiment Object, and format it for visualization the tensor decomposition:

[9]:

# load processed data with liana results from 02-Infer-Communication-Scores.ipynb

covid_data <- readRDS(file.path('..', '..', 'data', 'covid_balf_norm.rds'))

# format

# formatted.factors<-format_c2c_factors(tensor$factors)

# saveRDS(formatted.factors, paste0(output_folder, 'Loadings.rds'))

# covid_data@metadata$tensor_res<-formatted.factors

covid_data@metadata$tensor_res <- liana:::format_c2c_factors(tensor$factors)

Save data for downstream analyses

[10]:

saveRDS(covid_data, file.path('..' , '..', 'data', 'covid_data_sce.rds'))

[11]:

h_ = 13

w_ = 15

options(repr.plot.height=h_, repr.plot.width=w_)

plot_c2c_overview(sce = covid_data, group_col = 'condition', sample_col = 'sample_new')

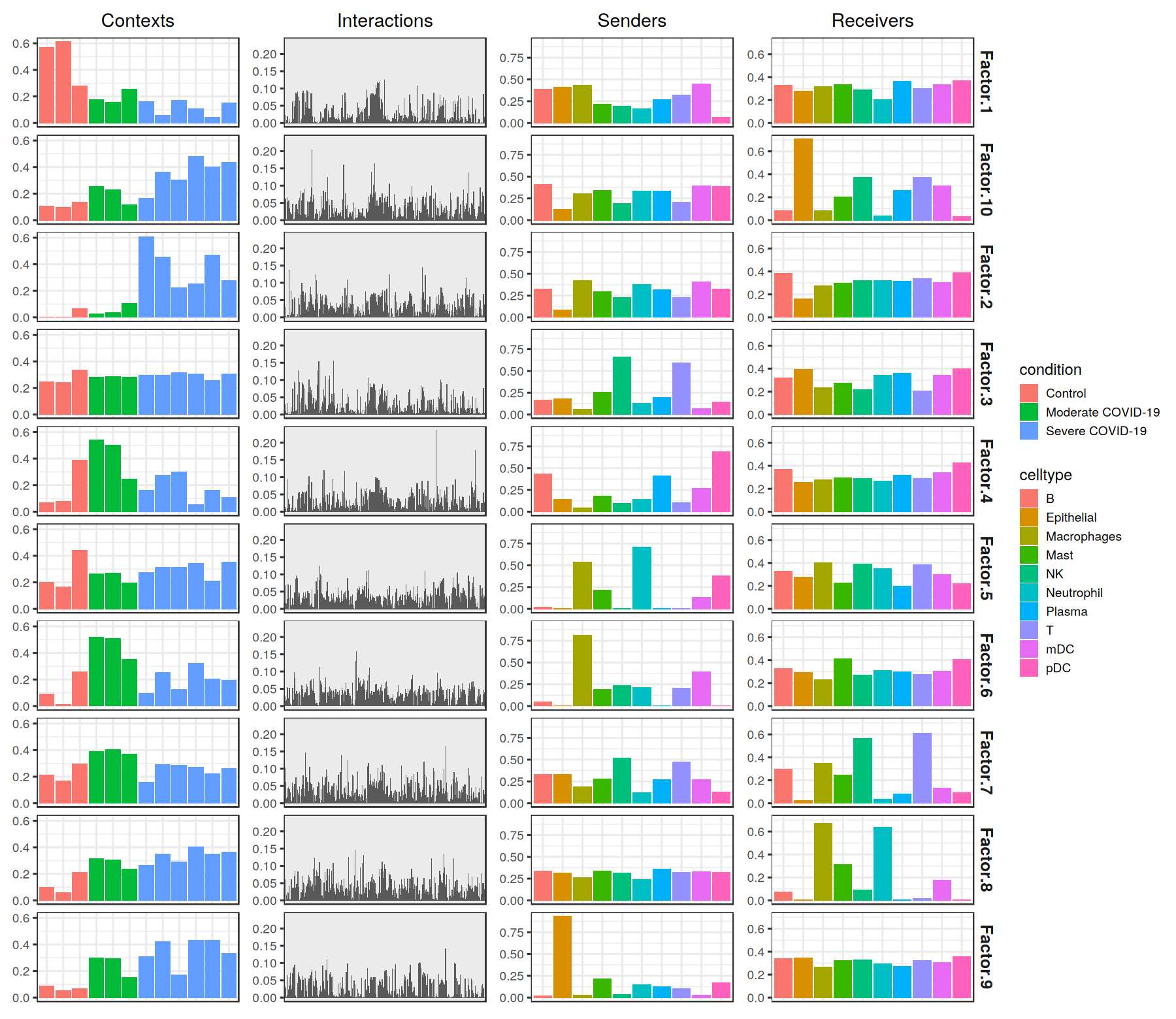

Factorization Results

After running the decomposition, the results are stored in the factors attribute (tensor$factors). This attribute is a dictionary (named list) containing the loadings for each of the elements in every tensor dimension. Keys are the names of the different dimension.

[12]:

names(tensor$factors)

- 'contexts'

- 'interactions'

- 'senders'

- 'receivers'

We can inspect the loadings of the samples, for example, located under the key 'Contexts'.

[13]:

tensor$factors[['contexts']]

| Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 | Factor 6 | Factor 7 | Factor 8 | Factor 9 | Factor 10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | |

| HC1 | 0.57167751 | 0.0040322351 | 0.2486676 | 0.06951163 | 0.1992737 | 0.09138259 | 0.2109948 | 0.09901118 | 0.08743461 | 0.10624311 |

| HC2 | 0.61345816 | 0.0009374141 | 0.2422200 | 0.07904458 | 0.1662704 | 0.01086045 | 0.1696340 | 0.05779721 | 0.05216325 | 0.09475538 |

| HC3 | 0.28084552 | 0.0640010983 | 0.3343133 | 0.38925144 | 0.4410195 | 0.25950903 | 0.2944204 | 0.21048699 | 0.06730253 | 0.13640665 |

| M1 | 0.17811552 | 0.0243369564 | 0.2788751 | 0.54256505 | 0.2649768 | 0.51976120 | 0.3917096 | 0.31559205 | 0.29760960 | 0.25299737 |

| M2 | 0.15766080 | 0.0367470123 | 0.2851860 | 0.50009239 | 0.2689672 | 0.50643295 | 0.4055026 | 0.30275965 | 0.29582947 | 0.22803977 |

| M3 | 0.25531387 | 0.1038309112 | 0.2800323 | 0.24746110 | 0.1943755 | 0.35284221 | 0.3694525 | 0.23726170 | 0.15349986 | 0.11353971 |

| S1 | 0.16227683 | 0.6068011522 | 0.2968922 | 0.16380185 | 0.2758042 | 0.09672241 | 0.1598114 | 0.26295593 | 0.31008309 | 0.16405572 |

| S2 | 0.05848442 | 0.4525174201 | 0.2955828 | 0.27341583 | 0.3142951 | 0.25263739 | 0.2916600 | 0.35102302 | 0.42103592 | 0.36295748 |

| S3 | 0.17109768 | 0.2214141041 | 0.3145112 | 0.29934934 | 0.3125799 | 0.12573658 | 0.2879774 | 0.29225123 | 0.16905822 | 0.30484933 |

| S4 | 0.10837201 | 0.2505245507 | 0.3078077 | 0.05209281 | 0.3427818 | 0.32328588 | 0.2711394 | 0.40252998 | 0.43096238 | 0.47937286 |

| S5 | 0.04310614 | 0.4705861211 | 0.2580555 | 0.16272153 | 0.2119863 | 0.20446612 | 0.2239244 | 0.35042289 | 0.43328699 | 0.40211794 |

| S6 | 0.15356158 | 0.2773903906 | 0.3073083 | 0.10633880 | 0.3504498 | 0.19429857 | 0.2616548 | 0.36386305 | 0.33164164 | 0.43490598 |

If we are interested in the top elements of a given dimension, here for example 'interactions', we can easily obtain them:

[14]:

tensor$get_top_factor_elements(order_name='interactions',

factor_name='Factor 10',

top_number=as.integer(10))

- CALM2^PDE1B

- 0.202606901526451

- HLA-C^LILRA1

- 0.164520159363747

- CSF1^CSF2RA

- 0.159353837370872

- HLA-A^LILRA1

- 0.139374673366547

- TIGIT^NECTIN2

- 0.13768395781517

- CD70^TNFRSF17

- 0.12326680123806

- ICAM1^ITGAM_ITGB2

- 0.104458123445511

- HLA-B^LILRA1

- 0.10074632614851

- PLAU^ST14

- 0.0999555811285973

- LGALS9^MET

- 0.097864530980587

Supplementary Information about the Tensor Decomposition

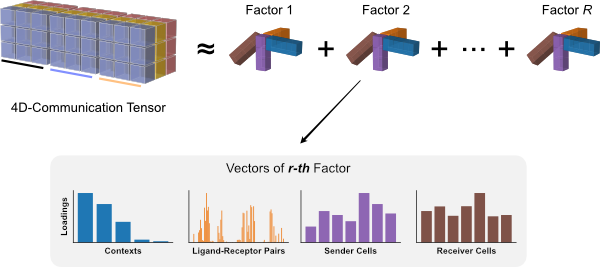

The tensor decomposition performed here is a non-negative canonical polyadic decomposition (CPD) (same performed in the elbow analysis). Briefly, tensor decomposition identifies a low-rank tensor (here, a rank of 10) that approximated the full tensor. This low-rank tensor can be represented as the sum of a set of rank-1 tensors (10 of them in this case). Each rank-1 tensor represents a factor in the decomposition and can be further represented as the outer product of n vectors, where n represents the number of tensor dimensions. Each vector represents one of the n tensor dimensions for that factor and its values, corresponding to individual elements in each dimension, represent the factor loadings. In our case, each factor will contain loadings for the context, LR pair, sender-cell, and receiver-cell dimensions. Those elements within each factor that contain high loadings contribute to the factor-specific communication pattern.

Pipeline

To combine the tensor building from Tutorial 03 with the rank selection and decomposition steps from this tutorial, we can run the liana_tensor_c2c function while setting the build_only argument to FALSE:

First, preprocess the context_df_dict variable as in Tutorial 03:

[15]:

# preprocess.scores<-function(context_df_dict,

# score_col = "LRscore",

# sender_col = "source", receiver_col = "target",

# ligand_col = "ligand.complex", receptor_col = "receptor.complex",

# outer_fraction = 0, invert = TRUE, non_negative = TRUE, non_negative_fill = 0

# ){

# source.cells<-sapply(context_df_dict, function(x) x[[sender_col]])

# target.cells<-sapply(context_df_dict, function(x) x[[receiver_col]])

# all.cells.persample<-sapply(names(source.cells),

# function(sample.name) union(source.cells[[sample.name]], target.cells[[sample.name]]))

# all.cells<-Reduce(union, unname(unlist(all.cells.persample)))

# cell.counts.persample<- sapply(all.cells,

# function(ct) length(which(sapply(all.cells.persample, function(x) ct %in% x))))

# cells.todrop<-names(which(cell.counts.persample < (outer_fraction*length(context_df_dict))))

# lrs.persample<-sapply(context_df_dict, function(x) paste(x[[ligand_col]], x[[receptor_col]],

# sep = '^'))

# all.lrs<- Reduce(union, unname(unlist(lrs.persample)))

# lr.counts.persample<-sapply(all.lrs, function (lr) length(which(sapply(lrs.persample, function(x) lr %in% x))))

# lrs.todrop<-names(which(lr.counts.persample < (outer_fraction*length(context_df_dict))))

# for (sample.name in names(context_df_dict)){

# ccc.sample<-context_df_dict[[sample.name]]

# if (invert){ccc.sample[[score_col]]=(1- ccc.sample[[score_col]])}# invert score

# if (non_negative){ccc.sample[[score_col]][ccc.sample[[score_col]] < 0] = non_negative_fill} # make non-negative

# # apply the outer_frac parameter

# ccc.sample[(!(ccc.sample[[sender_col]] %in% cells.todrop)) &

# (!(ccc.sample[[receiver_col]] %in% cells.todrop)),]

# ccc.sample<-ccc.sample[!(paste(ccc.sample[[ligand_col]], ccc.sample[[receptor_col]],

# sep = '^') %in% lrs.todrop),]

# context_df_dict[[sample.name]]<-ccc.sample

# }

# return(context_df_dict)

# }

# data_folder = '/data/hratch/ccc_protocols/interim/liana-outputs/'#'../../data/liana-outputs/' # <--replace in final version

# env_name = 'ccc_protocols' # conda environemnt created by ../../env_setup/setup_env.sh

# context_df_dict<-readRDS(paste0(data_folder, 'LIANA_by_sample_R.rds'))

# context_df_dict<-preprocess.scores(context_df_dict, outer_frac = 1/3,

# invert = TRUE, non_negative = TRUE, non_negative_fill = 0)

[16]:

# tensor <- liana_tensor_c2c(context_df_dict = context_df_dict,

# # build args

# sender_col = "source", # Column name of the sender cells

# receiver_col = "target", # Column name of the receiver cells

# ligand_col = "ligand.complex", # Column name of the ligands

# receptor_col = "receptor.complex", # Column name of the receptors

# score_col = 'LRscore', # Column name of the communication scores to use

# how='outer', # What to include across all samples

# lr_fill = NaN, # What to fill missing LRs with

# cell_fill = NaN, # What to fill missing cell types with

# lr_sep='^', # How to separate ligand and receptor names to name LR pair

# context_order=sorted_names, # Order to store the contexts in the tensor

# sort_elements = TRUE, # Whether sorting alphabetically element names of each tensor dim. Does not apply for context order if context_order is passed.

# conda_env = env_name, # used to pass an existing conda env with cell2cell

# # decomposition args

# build_only = FALSE, # set this to FALSE to combine the downstream rank selection and decomposition steps all here

# device = device # Device to use when backend is pytorch.

# rank = NULL,

# seed = 0,

# init = 'random',

# upper_rank = 25,

# runs = 3,

# factors_only = FALSE,

# inplace = FALSE

# )

Subset of a Tensor

From this analysis we may also be interested in a subset of the original tensor to inspect the cell-cell communication of a subset of samples, LR pairs, sender cells, or receiver cells.

We can subset the original tensor without having to build it from the beginning. For example, we are interested in the CCC of NKs and T cells as the senders, and B cells, Macrophages, mDC and pDC cells as receivers. We can do so by creating a dictionary specifying the elements of the dimensions that we want to subset. Here, the dimensions corresponds to: - 0 for the Contexts - 1 for the Ligand-Receptor Pairs - 2 for the Sender Cells - 3 for the Receiver Cells

If we do not include a dimension in the following dictionary, that dimension will not be subset.

[17]:

sender_subset = list('NK', 'T')

receiver_subset = list('B', 'Macrophages', 'mDC', 'pDC')

subset_dict<-reticulate::py_dict(keys = c(as.integer(2), as.integer(3)),

values = list(sender_subset, receiver_subset))

subset_dict

{2: ['NK', 'T'], 3: ['B', 'Macrophages', 'mDC', 'pDC']}

Some key parameters here are:

original_orderindicates whether keeping the element order as in the original tensor or as in the subset list.remove_duplicatesindicates whether removing duplicate elements in the subset lists. Duplicates can be introduced especially when passing these lists manually.keepindicates what duplicated element to keep. Options are:‘first’ : Drop duplicates except for the first occurrence.

‘last’ : Drop duplicates except for the last occurrence.

False : Drop all duplicates.

[18]:

subset_tensor = c2c$tensor$subset_tensor(interaction_tensor=tensor,

subset_dict=subset_dict,

original_order=TRUE)

If we compare the size of the original tensor with the subset one, we can see that we only modified the senders and receiver cells.

[19]:

print(tensor$shape)

print(subset_tensor$shape)

torch.Size([12, 1054, 10, 10])

torch.Size([12, 1054, 2, 4])